3 lessons from IBM on designing responsible, ethical AI

AI technology will affect all of human society, so it's crucial to put in the work to get it right.

Image: Gerd Altmann / Pixabay

- IBM is well underway on its mission to develop and advance ethical AI technology.

- A new report, co-authored by the World Economic Forum and the Markkula Center for Applied Ethics at Santa Clara University, details these efforts.

- Here are its main lessons for other organizations in this space.

Over the past two years, the World Economic Forum has been working with a multi-stakeholder group to advance ethics in technology under a project titled Responsible Use of Technology. This group has identified a need to highlight and share best practices in the responsible design, development, deployment and use of technology. To this end, we have embarked on publishing a series of case studies that feature organizations that have made meaningful contributions and progress in technology ethics. Earlier this year, we began this series with a deep dive into Microsoft’s approach to responsible innovation.

In the second edition of this series, we focus on IBM’s journey towards ethical AI technology. The insights from this effort are detailed in a report titled Responsible Use of Technology: The IBM Case Study, which is jointly authored by the World Economic Forum and the Markkula Center for Applied Ethics at Santa Clara University. Below are the key lessons learned from our research, along with a brief overview of IBM's historical journey towards ethical technology.

1. Trusting your employees to think and act ethically

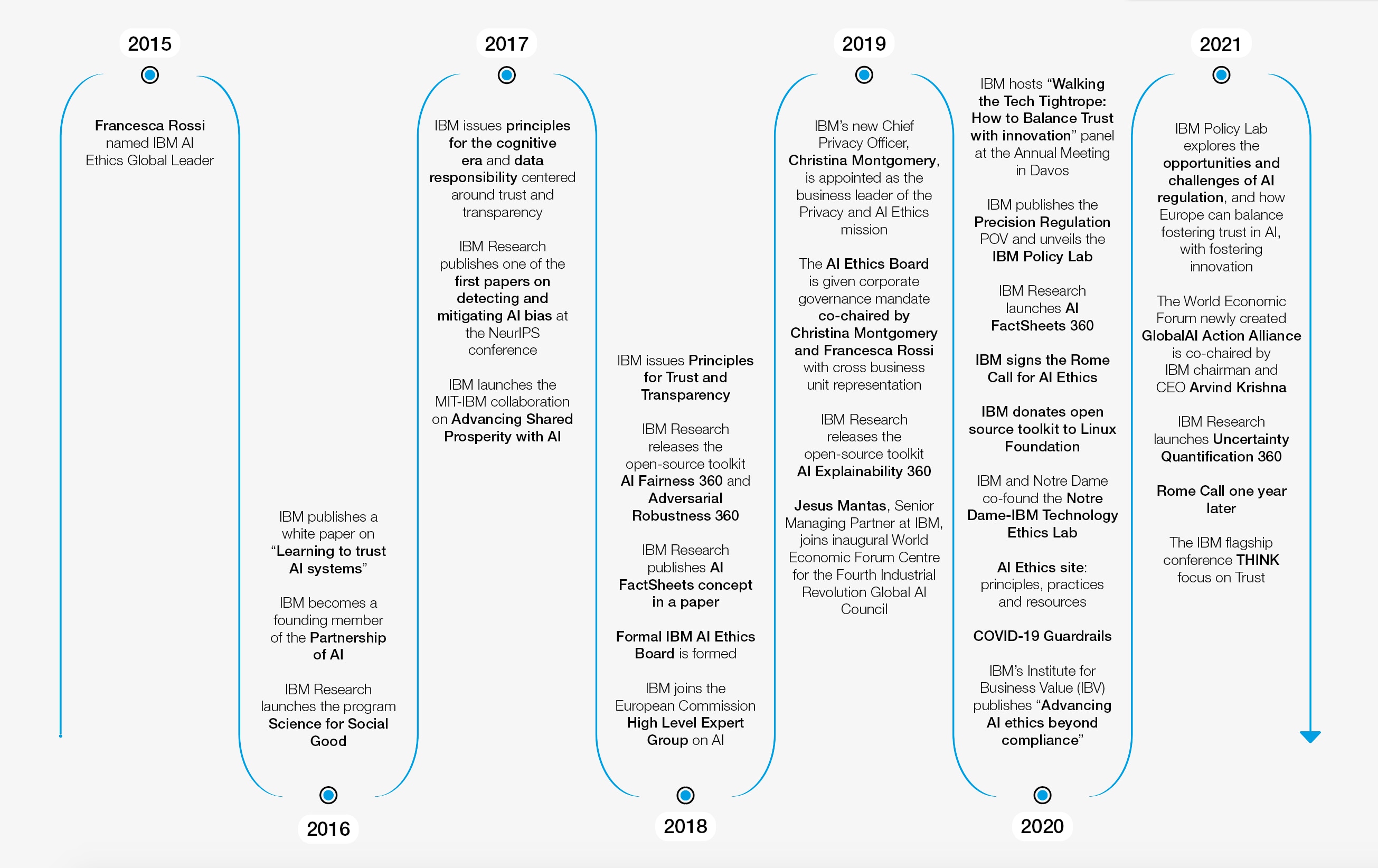

When Francesca Rossi joined IBM in 2015 with the mandate to work on AI ethics, she convened 40 colleagues to explore this topic. The work of this group of employees set in motion a critical chapter of IBM’s ethical AI technology journey.

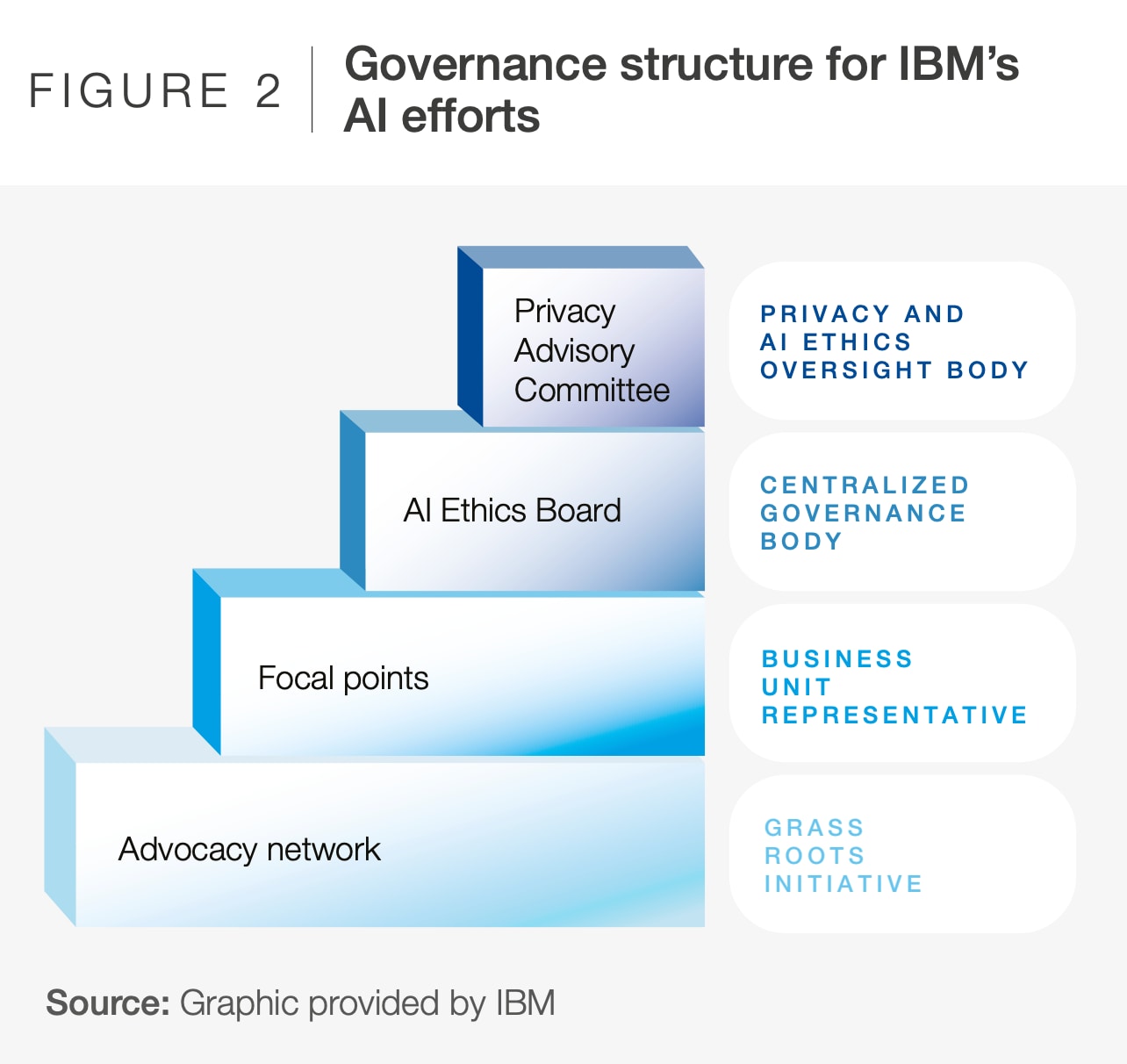

The group first researched and published a paper titled Learning to trust AI systems, in which the company commits to deepening its understanding of AI ethics and to putting the ethical development of AI into practice. One of these commitments is to establish an internal 'AI ethics board' to discuss, advise on and guide the ethical development and deployment of AI systems. Led by Dr. Rossi and IBM’s chief privacy officer, Christina Montgomery, this board governs the company’s technology ethics efforts globally.

However, a centralized ethics board alone cannot oversee more than 345,000 employees working in over 175 countries. All employees are therefore being trained in the 'ethics by design' methodology, and every IBM business unit is required to adopt it. The AI ethics board also relies on a group of employees called 'focal points', whose official role is to support their business units on ethics-related issues.

There is also a network of employee volunteers called the 'advocacy network', which promotes a culture of ethical, responsible and trustworthy technology. Empowering employees is an important part of making technology ethics work at IBM.

2. Operationalizing values and principles on AI ethics

To operationalize ethics, an organization must first commit to a set of values. To this end, IBM developed a set of 'principles and pillars' to direct its research and business towards supporting key values in AI. Implementing these principles and pillars led to technical toolkits that carry ethics down to the level of code. IBM Research created five open-source toolkits, freely available to anyone:

1. AI Explainability 360: eight algorithms for making machine learning models more explainable.

2. AI Fairness 360: a set of 70 fairness metrics and 10 bias-mitigation algorithms.

3. Adversarial Robustness Toolbox: a large set of tools for countering a range of adversarial attacks on machine learning models.

4. AI FactSheets 360: to make AI models transparent, FactSheets collect information about a model in one place. The toolkit includes example FactSheets and a methodology for creating them.

5. Uncertainty Quantification 360: a set of tools for testing how reliable AI predictions are, which helps to place bounds on model confidence.
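Fairness toolkits such as AI Fairness 360 package metrics that compare how often different groups receive a favorable outcome. The sketch below illustrates what two such group-fairness metrics measure: statistical parity difference and disparate impact. It is written in plain Python and does not use the toolkit's own API. The function names and the toy loan-decision data are hypothetical and chosen only for illustration.

```python
# Minimal illustrative sketch (not the AI Fairness 360 API): two group-fairness
# metrics computed by hand on hypothetical binary decisions, 1 = favorable outcome.


def favorable_rate(outcomes: list[int]) -> float:
    """Share of favorable (1) outcomes within one group."""
    if not outcomes:
        raise ValueError("group must contain at least one outcome")
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(unprivileged: list[int], privileged: list[int]) -> float:
    """P(favorable | unprivileged) - P(favorable | privileged); 0 means parity."""
    return favorable_rate(unprivileged) - favorable_rate(privileged)


def disparate_impact(unprivileged: list[int], privileged: list[int]) -> float:
    """P(favorable | unprivileged) / P(favorable | privileged); 1 means parity."""
    privileged_rate = favorable_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return favorable_rate(unprivileged) / privileged_rate


# Hypothetical loan decisions for two groups (toy data, not from the report).
unprivileged_group = [1, 0, 0, 1, 0]  # 2 of 5 favorable -> rate 0.4
privileged_group = [1, 1, 0, 1, 1]    # 4 of 5 favorable -> rate 0.8

spd = statistical_parity_difference(unprivileged_group, privileged_group)
di = disparate_impact(unprivileged_group, privileged_group)
print(f"statistical parity difference: {spd:.2f}")  # -0.40
print(f"disparate impact: {di:.2f}")                # 0.50
```

A negative statistical parity difference, or a disparate impact below 1, means the unprivileged group receives favorable outcomes less often. A bias-mitigation algorithm would aim to move these values towards 0 and 1 respectively.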

Any organization can adopt principles or make other ethical commitments, but these must be operationalized to become a reality. These toolkits help IBM fulfill its own ethical commitments. By making them open source, IBM also hopes to help other tech companies and the broader machine learning community.

3. Aiming for broad impact on AI ethics

IBM recognizes that it is not separate from the tech industry or the world. Its actions will affect the reputation of the industry – and vice versa – and, ultimately, the entire tech industry together will affect human society. All this is to say that the stakes are high, so it is worth putting in the effort to get it right.

One of the ways IBM has tried to promote the broad positive impact of technology is through partnerships with educational, research and multistakeholder organizations.

In education, IBM launched P-TECH in 2011, a programme in which high school students build career-applicable skills while working with an IBM mentor. Participants can eventually take part in paid summer internships at IBM and enroll in an associate’s degree programme at no cost.

At the university level, IBM has several partnerships with schools, such as the Notre Dame-IBM Tech Ethics Lab. These partnerships support underrepresented communities in STEM subjects and their teachers, and help improve relevant curricula. By teaming up with research organizations, IBM is helping to train the next generation of technology professionals. It is also helping to create communication channels between academia and industry, including channels through which both sides can raise and discuss ethical concerns.

IBM is a founding member of several multistakeholder organizations and initiatives, including the Partnership on AI to Benefit People and Society (2016) and the Vatican’s 'Rome Call for AI Ethics' (2020). IBM also takes part in many World Economic Forum initiatives related to AI ethics, including the Global Future Council on AI for Humanity, the Global AI Council, and the Global AI Action Alliance.

Besides these institutional partnerships, individual IBM employees are also involved in the IEEE’s Ethics in Action Initiative, the Future of Life Institute, the AAAI/ACM AI, Ethics, and Society conference, and the ITU AI for Good Global Summit.

IBM finds it worth its time and money to support these many initiatives for at least three reasons. First, IBM is committed to educating people and society about how technology and AI work. A better-educated society, and especially better-educated decision-makers, can make better decisions about the future role of AI in society.

Second, IBM wants to gather input from society about the ways AI is affecting the world. In this way, IBM can be more responsive towards the social impact of AI and act more quickly to prevent or react to harms. This helps to make sure that AI is truly delivering on its promise as a beneficial technology.

Third, IBM and its employees are committed to developing, deploying and using AI to benefit the world. When society is educated about AI and tech companies understand its immediate impacts on society, AI is more likely to be directed towards good uses.

More and more people are taking a longer look at ethics and responsible innovation – and in many cases, they and their organizations are making these ideas an integral part of their corporate culture. All of these efforts contribute to making AI a better – and therefore more trustworthy – technology.

Looking ahead

Although IBM’s size and scale allow it to invest significant resources in these important areas, the lessons learned from IBM’s journey around the ethical use of AI technology are relevant to any organization. As companies evolve and grow, it becomes infeasible for leaders to oversee every aspect of their business, so they must trust and empower their employees to make ethical decisions. Leaders need to provide the principles, guidelines, training, tools and support that enable employees to feel empowered and ready to handle ethical issues. Lastly, the overall purpose of responsible technology is to deliver positive impacts to society. The scale and scope of those impacts will vary by organization, but the goal of improving society should remain consistent.

As more organizations share their experiences in adopting ethical technology practices, there is an opportunity to investigate the similarities and differences in their approaches. Over time, there may be ways to measure their successes and failures while analyzing the paths that led to those outcomes. The hope is that organizations can learn from one another’s experiences, so that more of them adopt methods and tools for the responsible use of technology.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
